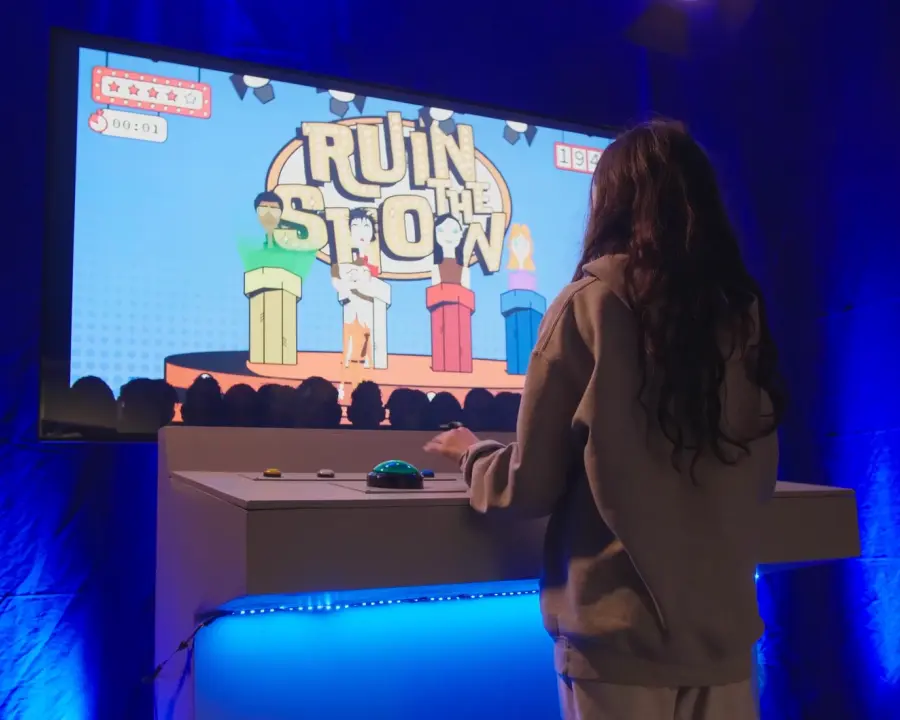

Our approach centered on creating an experience that was both engaging and unsettling. By restricting input to facial movements, we required users to interact with the technology in an unconventional way.

Immersive

Interactive

Installation

TouchDesigner

MediaPipe

VCV Rack 2